MemoQuery: Privacy-first AI

AI is transforming how we work—but at a hidden cost: your data. Most AI tools today require sending sensitive documents, emails, and plans to remote servers owned by third parties. MemoQuery takes a different path: a fully private, local AI engine that lives where your data lives—on your device. Essential for many sectors, preferable for all. Fully integrated with MemoConductor and MemoGo, it forms the foundation of a whole new raft of AI-native applications.

In the rush to embrace artificial intelligence, a critical question has been largely overlooked: Where does your data go? The current paradigm is built around massive, cloud-based LLMs that require you to send your most sensitive information to a third party. For many organisations, particularly in sectors like law, finance, and healthcare, this is a non-starter. For individuals, it's a Faustian bargain: convenience in exchange for privacy.

And privacy is not the only problem. While LLMs are a revolutionary leap forward, their technological basis has significant shortcomings that are often masked by impressive demonstrations. A useful analogy is to think of LLMs as a "lossy JPEG" of the internet. They compress the vastness of human knowledge into a statistical model, but this process comes with artefacts, or "hallucinations." They are brilliant at generating grammatically correct English—the digital equivalent of typing out Shakespeare with a trillion monkeys—but they lack a grounded model of reality. This is why an LLM might correctly extract some data from a text but then literally make up other data.

Accuracy is non-negotiable

Legal discovery is a good example. In both civil and criminal cases, lawyers often have to trawl through tens of thousands of documents and emails. This process can take paralegals weeks of manual review, answering questions such as “who knew what, when?”, “what was X’s thinking at this time?” and “which content is privileged?”. LLMs are well suited to cutting that work from weeks to hours. But there are issues. First, accuracy is non-negotiable: everything has to be cross-checked against the source. Second, the data is far too sensitive to share with a third-party cloud provider, especially one that may reside in a different jurisdiction.
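To make "cross-checked against source" concrete, here is a minimal Python sketch of one possible check: every value a model extracts must come with a supporting quote, and that quote must actually appear in the cited document. The claim structure (`doc_id`, `quote`, `value`) and the function names are assumptions made for illustration, not MemoQuery's actual data model or API.

```python
import re


def normalise(text):
    """Collapse whitespace and lowercase, so line wrapping alone does not cause a mismatch."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_claims(claims, documents):
    """Return the claims whose supporting quote cannot be found in the cited document.

    `claims` is a list of dicts with hypothetical keys 'doc_id', 'quote' and 'value'.
    `documents` maps a document id to its full text.
    """
    failures = []
    for claim in claims:
        source = documents.get(claim["doc_id"])
        quote = normalise(claim.get("quote", ""))
        # An empty quote or an unknown document is treated as unsupported.
        if not quote or source is None or quote not in normalise(source):
            failures.append(claim)
    return failures


# Illustrative data only; not taken from any real case.
documents = {
    "email-17": "Hi Sam,\nThe board approved the merger\non 3 March. Regards, Alex",
}
claims = [
    {"doc_id": "email-17", "quote": "The board approved the merger on 3 March", "value": "approved"},
    {"doc_id": "email-17", "quote": "The board rejected the merger", "value": "rejected"},
]

for claim in unsupported_claims(claims, documents):
    print("Needs human review:", claim["value"])
```

A check like this does not prove an answer is right, but it does flag any extracted value that has no verbatim support in the source, which is where a reviewer's attention is best spent.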

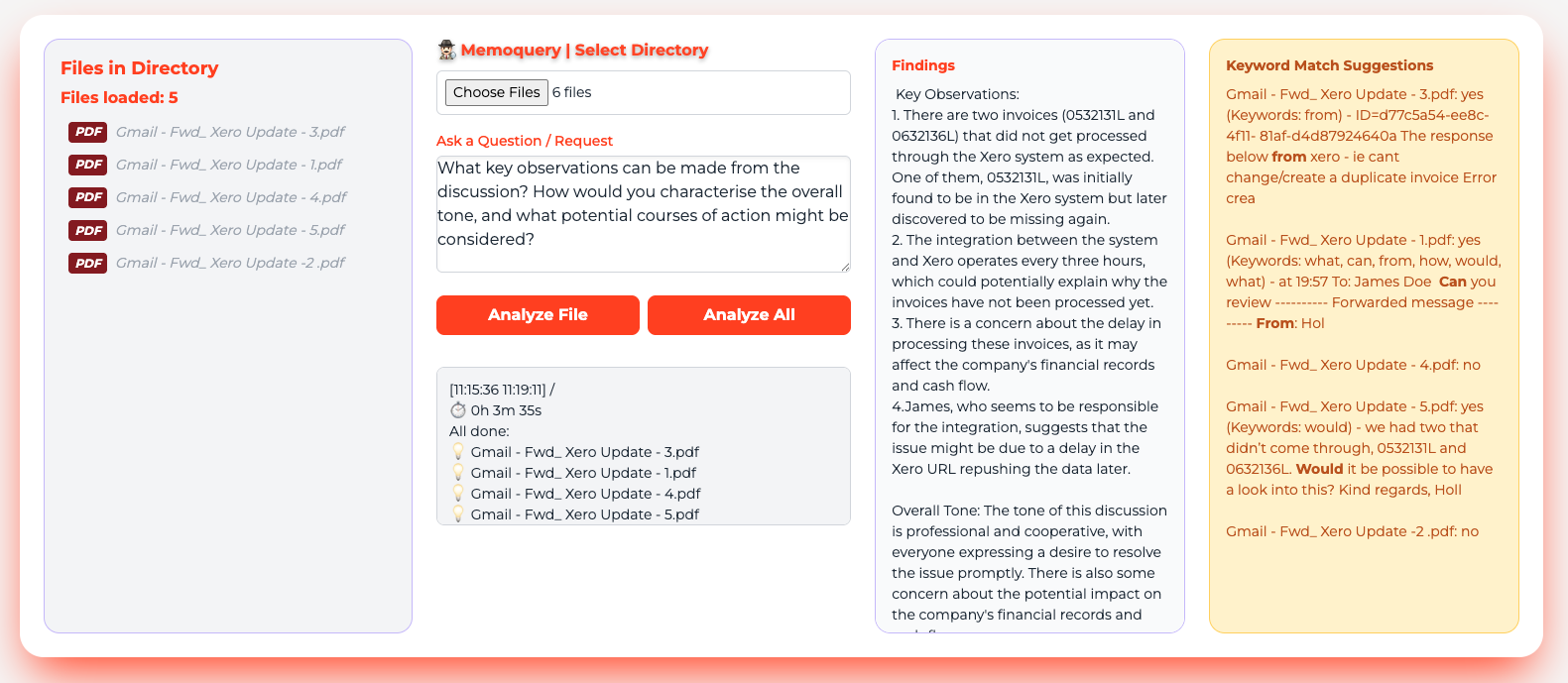

MemoQuery solves this fundamental problem by bringing the power of AI to your data, not the other way around. Built to run locally on a user's machine, MemoQuery ensures that your documents, conversations, and personal data never leave your device. This isn’t just a feature; it’s a core architectural principle that gives users and businesses total data sovereignty. MemoQuery’s true power is unlocked when it is combined with MemoConductor, a resilient, distributed data layer, and MemoGo, a declarative, data-driven platform for building applications. MemoQuery completes this trifecta by adding a powerful, local AI engine that can reason over this data. For example, a paralegal using MemoQuery could isolate privileged communications, extract timelines, and summarise conversations, all offline and fully auditable.

This synergy enables the creation of a new generation of "AI-native" applications that are fundamentally different from today’s AI tools:

Privacy by Design

Because MemoQuery operates on local data, every AI-driven function—from summarisation and analysis to content generation—is performed without ever exposing your sensitive information to an external server. Your data remains in your control, always.

Decentralised Intelligence

MemoQuery breaks free from the centralised, monolithic AI model. Instead of relying on a single, massive brain in the cloud, each MemoQuery instance is an intelligent agent operating on a local, distributed copy of the data. This means an AI-native application built on MemoQuery is as resilient and available as the MemoConductor data it's built on, with no reliance on an internet connection for core functionality.

Flexible Installation

Small-scale models of up to 7B parameters can run directly on a laptop, and larger ones on on-premises servers, fully under your control. As newer distilled LLMs appear and laptops increase in power, MemoGo’s open architecture can take full advantage of them, whilst still maintaining rules-based controls for accuracy.
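As a rough guide to why models of this size fit on a laptop, the memory needed for the weights alone is the parameter count multiplied by the bytes stored per weight. The short Python sketch below works this through for a 7B model. The precisions shown (16, 8 and 4 bits) are assumptions chosen for illustration, since the text above does not say which precision MemoQuery uses, and the figures exclude runtime overhead such as the context cache.

```python
def weight_memory_gb(parameters, bits_per_weight):
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


# Illustrative precisions; actual deployments may differ.
for bits in (16, 8, 4):
    print(f"7B parameters at {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

The halving at each step is what makes quantised small models practical on ordinary hardware.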

Configuration over Prompts

Just as MemoGo uses structured JSON for application logic, MemoQuery leverages this precision for its AI tasks. Instead of vague, imprecise natural language prompts, developers can use structured queries that precisely instruct the LLM, reducing ambiguity and ensuring consistent, reliable results. This approach, where AI logic is defined as data, allows for AI-driven functionality to be integrated directly into the application's configuration, making it as modular and adaptable as the rest of the system.
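The idea of AI logic defined as data can be sketched in a few lines of Python. The example below is hypothetical: the JSON keys (`task`, `source`, `fields`, `require_citation`), the allowed values and the function names are all assumptions for illustration, not MemoQuery's real schema. It shows a structured query being validated and then turned into the same instruction text every time, rather than relying on a hand-written prompt.

```python
import json
from typing import List

# Hypothetical structured query; every key name here is an illustrative assumption.
QUERY_JSON = """
{
  "task": "extract",
  "source": "case-emails",
  "fields": [
    {"name": "sender", "type": "string"},
    {"name": "sent_date", "type": "date"},
    {"name": "privileged", "type": "boolean"}
  ],
  "require_citation": true
}
"""

ALLOWED_TASKS = {"extract", "summarise", "classify"}
ALLOWED_TYPES = {"string", "date", "boolean", "number"}


def validate_query(query: dict) -> List[str]:
    """Return a list of problems; an empty list means the query is well formed."""
    errors = []
    if query.get("task") not in ALLOWED_TASKS:
        errors.append(f"unknown task: {query.get('task')!r}")
    if not isinstance(query.get("source"), str) or not query.get("source"):
        errors.append("source must be a non-empty string")
    fields = query.get("fields")
    if not isinstance(fields, list) or not fields:
        errors.append("fields must be a non-empty list")
    else:
        for spec in fields:
            if spec.get("type") not in ALLOWED_TYPES:
                errors.append(f"field {spec.get('name')!r} has unknown type {spec.get('type')!r}")
    return errors


def render_instruction(query: dict) -> str:
    """Build the model instruction deterministically from the validated query."""
    lines = [
        f"Task: {query['task']}",
        f"Source: {query['source']}",
        "Return one JSON object with exactly these fields:",
    ]
    for spec in query["fields"]:
        lines.append(f"- {spec['name']} ({spec['type']})")
    if query.get("require_citation"):
        lines.append("Quote the source passage that supports each value.")
    return "\n".join(lines)


query = json.loads(QUERY_JSON)
problems = validate_query(query)
if problems:
    raise ValueError("; ".join(problems))
print(render_instruction(query))
```

Because the query is plain data, it can be stored, versioned and reviewed alongside the rest of an application's configuration, and a malformed query is rejected before it ever reaches the model.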

MemoQuery isn’t just a tool; it’s a step toward reclaiming control over our data. It’s not just about a local LLM; it’s about a complete platform for building secure, private, and resilient applications that are intelligent by their very nature. By combining a local AI engine with a distributed database and a declarative development platform, we are creating the tools needed for a future where AI is a force for empowerment, not a threat to privacy.

If you’re building in legal, finance, or healthcare, or simply care about privacy, MemoQuery is how we build AI that serves us, not the other way around.