Beyond the Cloud

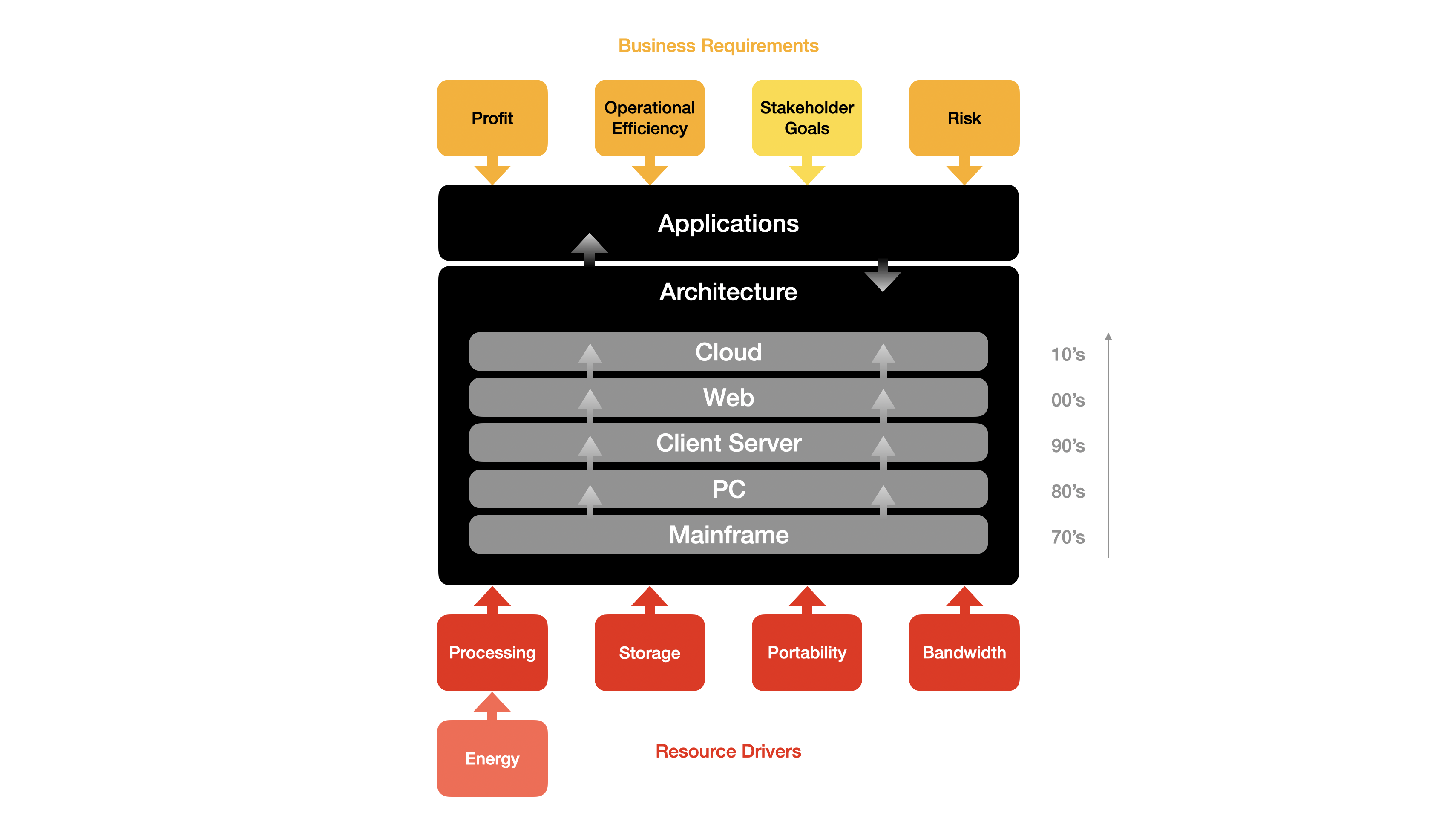

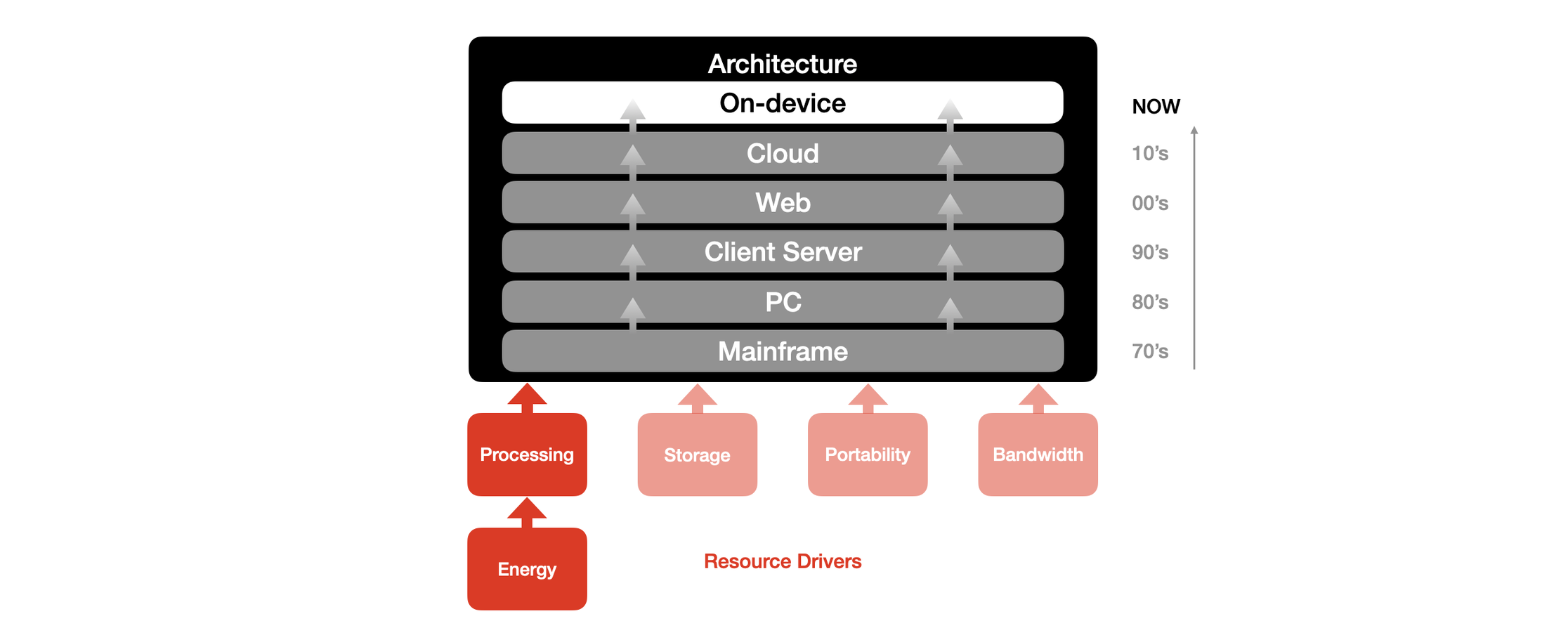

Every 10 to 20 years or so, there is a major platform shift in IT, one that sweeps away incumbents, just as Siebel replaced IBM CICS and Salesforce in turn replaced Siebel in CRM. I believe changes in both business goals and resource drivers are now coming together to force another architecture shift, one in which on-device computing will begin to displace the Cloud.

There is no one Moore’s Law

There are at least four Moore’s laws, and depending on your specialisation, probably more. The original Moore’s law was that the number of transistors on a single chip doubled every two years, a form of exponential growth and a proxy for processing power. More a rule of thumb than a law of physics, it remains a powerful reminder of the advancing pace of IT.

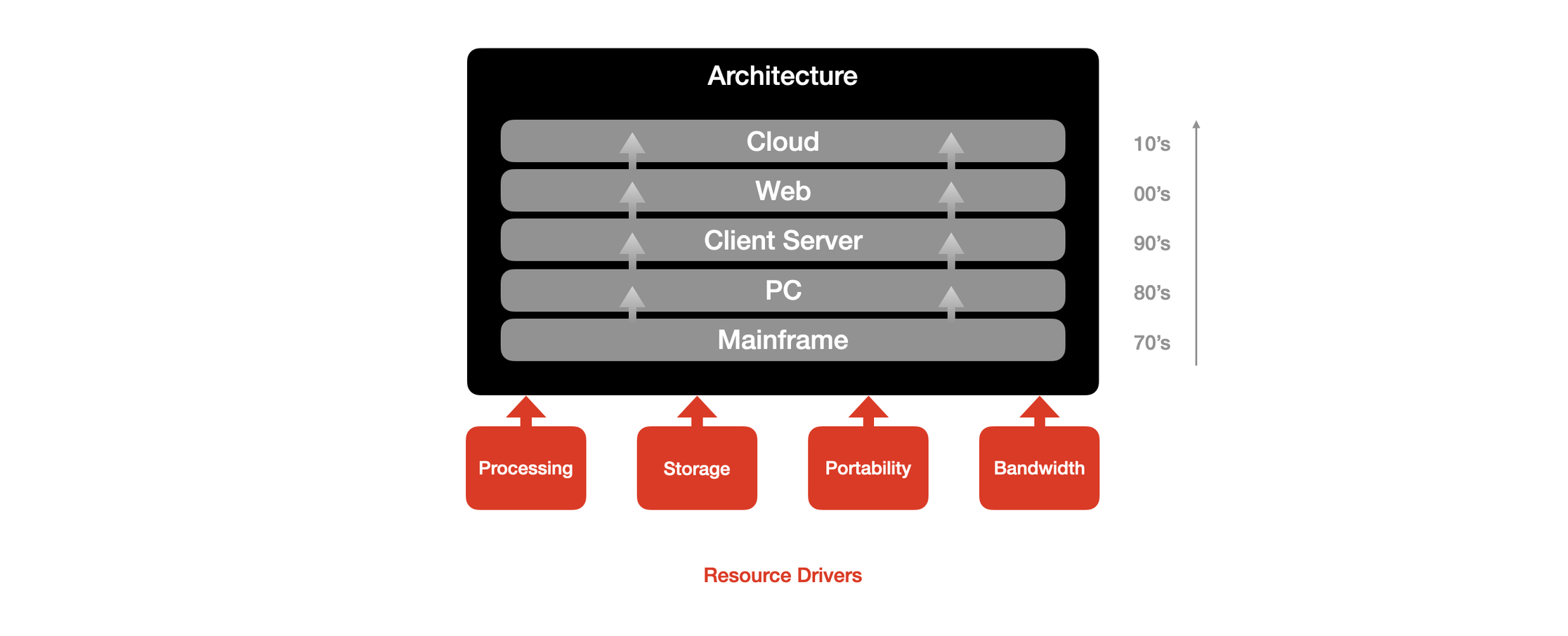

But computing is not just about processing power. Equally important are storage, bandwidth and miniaturisation/portability. All are subject to their own Moore’s laws, all advancing at different rates. This matters because, whilst it’s true that every part of a computer gets better every day, the different parts improve at different rates. Bottlenecks move, and architectures change to optimise around them.

The early days of business computing were dominated by the mainframe. Minicomputers, which came along later, were more affordable, but were still fundamentally based on sharing a single large, expensive computing resource among multiple users on low-cost dumb terminals. It was a market led by IBM.

Miniaturisation takes the lead...

The first big shift came in the 1980s with the rise of personal computing, made possible by the miniaturisation of computers. It took a two-pronged approach: games consoles for consumers, and personal computers for business professionals, with word processing and spreadsheets as the killer apps. The mainframe remained, but the dumb terminal now became just another application on the PC: a terminal emulator. Microsoft was the dominant player.

Then Processing and Networking…

As internal corporate networks became practical with then-new protocols like Ethernet, the dominant architecture in the 1990s moved to client-server. In this model the “mainframe” remained, but was stripped back to essentially just a database, while the business logic and UI display moved to the PC. This enabled much better Windows-based UIs, as well as much cheaper central servers. Machine rooms full of water-cooled mainframes were replaced by store rooms of rack-based servers, reducing costs and opening up access, and in the process making firms like Oracle supreme.

Bandwidth…

The second half of the 1990s and the new millennium saw the Internet explode. The network was now worldwide, and as telecommunications expanded, suddenly everyone was on it. For consumers it meant an explosion of readily available content and revolutionised shopping. For businesses it meant a move to multi-tenant Software-as-a-Service (SaaS) systems that slashed both vendor support costs and, in turn, end-user costs.

Miniaturisation (again)…

The release of the iPhone in 2007 heralded a gear change to even more networked access as phones became ever-present, always-on computers. Increasing miniaturisation meant it was practical to run applications in your pocket for the first time. In turn, this drove even more emphasis on connectivity and the many-to-many, cloud-based architecture that is the norm today.

Of course, no architecture shift ever fully supplants the past. Mainframes continue to dominate many areas. Your modern banking app on your iPhone will still be pulling your transactions from a water-cooled mainframe. But these shifts do change the focus.

And now, Processing (again)

Today we have supercomputers on our desks and in our pockets, but they are largely used as mere UI wrappers that wait 10 seconds or more for a central server, as application and data bloat have eaten away the last two decades’ improvements in bandwidth. This has placed more demands on data centres which, coupled with increased use of AI, has led to the situation where Ireland had to refuse permission for Google to expand its data centre due to a lack of grid capacity. Energy has become the new bottleneck.

With overloaded networks and data centres, but under-utilised personal devices, the time is ripe for a new architecture: On-device computing.

On-device Computing

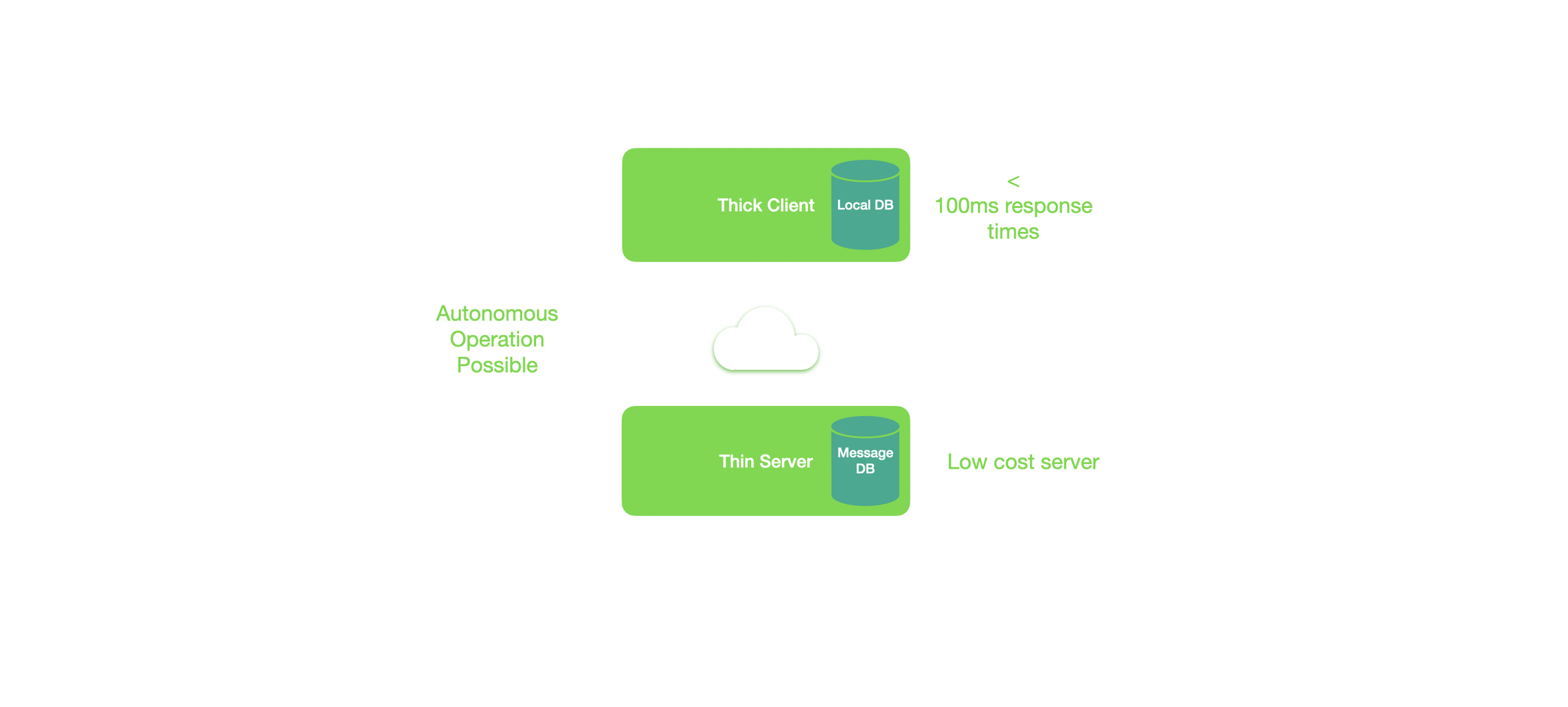

Whilst on-device computing implies local data, it does not completely eschew communications. Indeed, it is the communications aspect that represents the most novel part of the architecture.

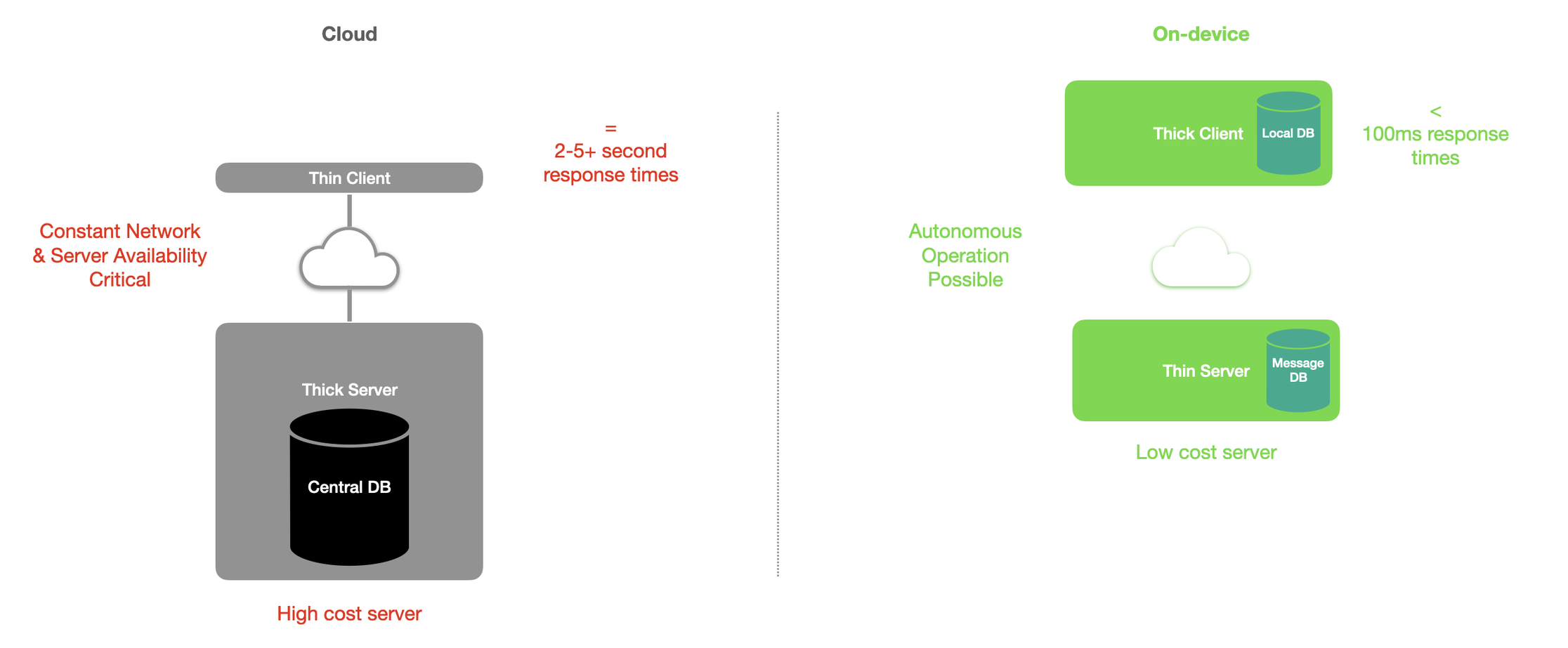

In a cloud-based system, all data is managed as a single logical database (even if it is physically split or shared across multiple systems). Clients send and retrieve data as entire records. This alone is expensive in bandwidth when perhaps only a single field, such as a contact’s phone number, has been updated.
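To make the bandwidth point concrete, here is a rough sketch comparing the payload for a whole-record update with a field-level delta. It assumes JSON encoding and a hypothetical contact record; real protocols add headers, authentication and compression, so the absolute byte counts mean little, only the difference in kind.

```python
import json

# Hypothetical contact record; field names and values are illustrative only.
contact = {
    "id": "c-1042",
    "name": "Ada Lovelace",
    "company": "Analytical Engines Ltd",
    "email": "ada@example.com",
    "phone": "+44 20 7946 0000",
    "address": "12 Example Street, London",
    "notes": "Met at the 2024 trade show; interested in the annual plan.",
}

def full_record_payload(record: dict) -> bytes:
    """Record-level sync: the whole record is sent, even for a one-field change."""
    return json.dumps(record).encode("utf-8")

def delta_payload(record_id: str, field_name: str, value: str) -> bytes:
    """Message-level sync: only the identity of the change and the new value are sent."""
    return json.dumps({"id": record_id, "field": field_name, "value": value}).encode("utf-8")

new_phone = "+44 20 7946 0999"
full = full_record_payload(dict(contact, phone=new_phone))
delta = delta_payload("c-1042", "phone", new_phone)
print(f"full record: {len(full)} bytes, delta: {len(delta)} bytes")
```

The wider the record (attachments, notes, history), the larger the gap becomes, while the delta stays roughly constant in size.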

By contrast, the heart of on-device communications is a message-based database. Here, only the contact’s new phone number is transmitted, as a message. Instead of a single hosted database, there are multiple local databases. As a consequence, the central message database can be much smaller and can run on much more modest processing power.
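A minimal sketch of the shape this takes, assuming a central append-only log of field-level messages and local replicas that catch up by replaying whatever they have not yet seen. The class and method names (`MessageLog`, `LocalReplica`, `sync`) are hypothetical, chosen for illustration rather than taken from any particular product.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Message:
    """One field-level change: which record, which field, the new value."""
    seq: int          # position in the central log, assigned on append
    record_id: str
    field_name: str
    value: str

class MessageLog:
    """Hypothetical central store: an append-only list of small messages, not full records."""

    def __init__(self) -> None:
        self.messages: list[Message] = []

    def append(self, record_id: str, field_name: str, value: str) -> Message:
        msg = Message(len(self.messages) + 1, record_id, field_name, value)
        self.messages.append(msg)
        return msg

    def since(self, seq: int) -> list[Message]:
        """Every message with a sequence number greater than `seq`."""
        return self.messages[seq:]

@dataclass
class LocalReplica:
    """A device's own database, kept current by replaying messages it has not yet seen."""
    records: dict = field(default_factory=dict)
    last_seq: int = 0

    def sync(self, log: MessageLog) -> None:
        for msg in log.since(self.last_seq):
            self.records.setdefault(msg.record_id, {})[msg.field_name] = msg.value
            self.last_seq = msg.seq

log = MessageLog()
laptop, phone = LocalReplica(), LocalReplica()
log.append("c-1042", "phone", "+44 20 7946 0999")
laptop.sync(log)
phone.sync(log)
```

The central log only ever holds the small deltas; all reads are served from each device’s local copy.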

A key feature of any database is enabling multiple users to make updates without overwriting each other’s changes. Typically this is implemented by temporarily locking the data being updated for each user, which is expensive. A message-based database, though, is inherently more robust to concurrent overwrites, because only the delta is transmitted. It is still a key requirement that all of this works according to the business requirements, including data consistency, availability and integrity.
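One way to see why deltas reduce conflicts is a per-field “last writer wins” merge, a simple policy used in some conflict-free replicated data type (CRDT) designs. This is a sketch of that one technique, not the only option: the logical `timestamp` and `device_id` tie-breaker are assumptions, and some business rules (stock levels, for example) would need a different merge.

```python
from typing import NamedTuple

class Update(NamedTuple):
    field_name: str
    value: str
    timestamp: int   # assumed logical clock value attached to each message
    device_id: str   # tie-breaker so every replica resolves conflicts identically

def merge(updates: list[Update]) -> dict[str, str]:
    """Per-field last-writer-wins merge.

    Because only deltas travel, edits to different fields never collide.
    Edits to the same field are resolved by (timestamp, device_id), so every
    replica reaches the same result whatever order the messages arrive in.
    """
    winners: dict[str, Update] = {}
    for u in updates:
        current = winners.get(u.field_name)
        if current is None or (u.timestamp, u.device_id) > (current.timestamp, current.device_id):
            winners[u.field_name] = u
    return {name: u.value for name, u in winners.items()}

# Two users edit the same contact while both are offline.
alice = Update("phone", "+44 20 7946 0999", timestamp=5, device_id="alice-laptop")
bob = Update("email", "ada@newdomain.example", timestamp=6, device_id="bob-phone")
bob_later = Update("phone", "+44 20 7946 0123", timestamp=7, device_id="bob-phone")

print(merge([alice, bob]))  # both edits survive: they touched different fields
```

With whole-record updates, whichever save arrived last would have silently discarded the other user’s change.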

Removing the need for synchronous updates also makes on-device architectures a natural fit for asynchronous processing and serverless computing.
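A small `asyncio` sketch of that decoupling, under the assumption that a local write returns immediately and a background worker pushes the message later. The worker here only simulates network latency; in a real deployment the receiving side could just as well be a queue-triggered serverless function. The names `save_locally` and `sync_worker` are hypothetical.

```python
import asyncio

async def sync_worker(outbox: asyncio.Queue, sent: list) -> None:
    """Background task: drains queued messages independently of the user."""
    while True:
        msg = await outbox.get()
        await asyncio.sleep(0.01)  # stands in for network latency (hypothetical)
        sent.append(msg)
        outbox.task_done()

def save_locally(db: dict, outbox: asyncio.Queue, record_id: str, field_name: str, value: str) -> None:
    """Foreground path: write to the local database, enqueue a message, return at once."""
    db.setdefault(record_id, {})[field_name] = value
    outbox.put_nowait((record_id, field_name, value))

async def main() -> tuple[dict, list]:
    db: dict = {}
    sent: list = []
    outbox: asyncio.Queue = asyncio.Queue()
    worker = asyncio.create_task(sync_worker(outbox, sent))
    save_locally(db, outbox, "c-1042", "phone", "+44 20 7946 0999")
    # The UI can read its own write straight away; nothing has been sent yet.
    assert db["c-1042"]["phone"] == "+44 20 7946 0999" and sent == []
    await outbox.join()  # wait only so the example can show the message went out
    worker.cancel()
    return db, sent

db, sent = asyncio.run(main())
```

The user never waits on the network; only the background worker does.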

There are some circumstances where a traditional central database is still needed. One simple example is generating unique, sequential PO numbers. But no architecture shift ever fully replaces existing approaches, and none should be expected to. Instead, the centre of gravity shifts to wherever resources are genuinely used most efficiently.
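The PO number case shows why some things stay central. A sketch, assuming a hypothetical `CentralPOAllocator` service: sequential, gap-free numbering needs one authority that knows the last number issued, whereas a locally generated UUID is unique without any coordination but carries no sequence.

```python
import threading
import uuid

class CentralPOAllocator:
    """Hypothetical central service: the single authority for sequential PO numbers.

    Every device must ask this one place (and wait for it) before allocating,
    which is exactly the synchronous dependency the rest of the design avoids.
    """

    def __init__(self, start: int = 1000) -> None:
        self._next = start
        self._lock = threading.Lock()

    def next_po(self) -> str:
        with self._lock:  # serialise concurrent requests so no number is issued twice
            po = f"PO-{self._next}"
            self._next += 1
            return po

def local_order_id() -> str:
    """On-device alternative: globally unique without coordination, but not sequential."""
    return str(uuid.uuid4())

allocator = CentralPOAllocator()
print(allocator.next_po(), local_order_id())
```

If the business only needs uniqueness, the local ID is enough; if auditors need an unbroken sequence, the central allocator stays.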

The message database is quite a sophisticated piece of kit, needing to support disconnected operation, automated reconnection, backups and much more. But the technology is now becoming available.
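Disconnected operation and automated reconnection are commonly handled with a local “outbox”. A minimal sketch, assuming a hypothetical `FlakyLink` transport that can be switched off to simulate losing the network. A real client would trigger `flush` from a network-change event or a back-off timer rather than by hand.

```python
from collections import deque

class FlakyLink:
    """Hypothetical transport that can be switched off to simulate losing the network."""

    def __init__(self) -> None:
        self.online = False
        self.delivered: list = []

    def send(self, msg) -> None:
        if not self.online:
            raise ConnectionError("no network")
        self.delivered.append(msg)

class Outbox:
    """Keeps working offline: messages queue locally and flush, in order, on reconnect."""

    def __init__(self, link: FlakyLink) -> None:
        self.link = link
        self.pending: deque = deque()

    def submit(self, msg) -> None:
        self.pending.append(msg)
        self.flush()

    def flush(self) -> int:
        """Send as many queued messages as possible; stop at the first failure."""
        sent = 0
        while self.pending:
            try:
                self.link.send(self.pending[0])
            except ConnectionError:
                break  # still offline: keep the message and retry on the next flush
            self.pending.popleft()  # only drop a message once it has been delivered
            sent += 1
        return sent

link = FlakyLink()
outbox = Outbox(link)
outbox.submit("m1")   # offline: queued locally
outbox.submit("m2")
link.online = True    # network comes back
outbox.flush()
```

Messages are removed from the queue only after delivery, so nothing is lost while offline and order is preserved.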

Changing Business Environment

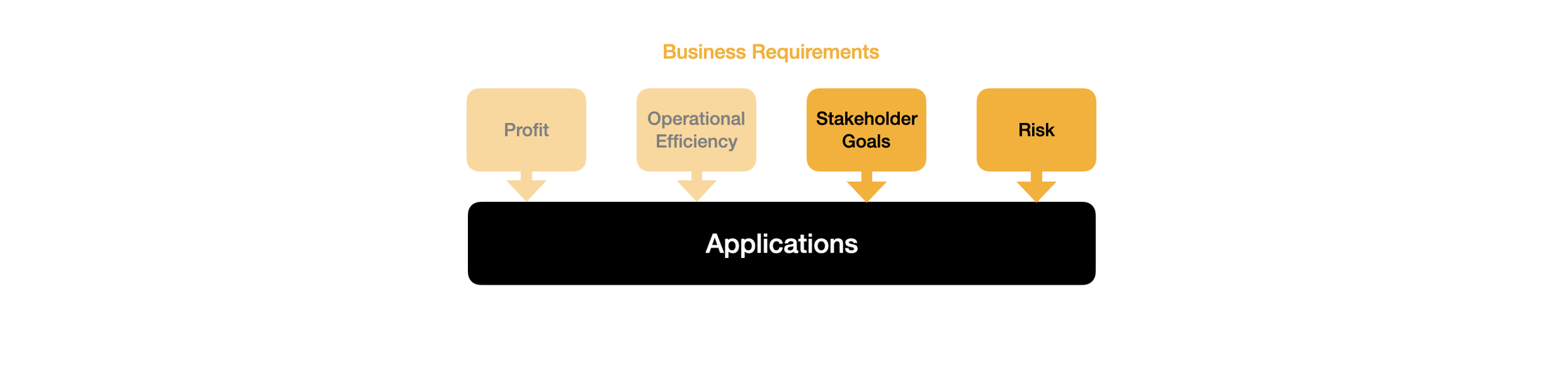

Business goals change in their relative importance, and it is these goals that drive applications and, in turn, the architecture that supports them.

Profit

This is, of course, eternal. All IT systems incur costs, and these have to be outweighed by their benefits: they have to earn their keep. Even if a new system does little more than existing systems, if it is cheaper, there is a profitability-based business case to change.

Operational Efficiency

Profit is how much money you make in a certain time frame; it is inherently a rate. But also important is how much money (or other assets) is needed to generate it. Software can help reduce inventories and improve cash flow. Even in the absence of any increase in profit, this is valuable.

Organisations often prefer to rent space rather than buy it, to avoid tying up capital that could be more profitably employed elsewhere. They are also able to scale expenditure with the business, a key capability if profitability is to be maintained. Financially speaking, the move to SaaS computing not only reduced costs to improve the bottom line on the income statement, but also converted fixed costs to variable costs.

Stakeholder Goals

Businesses are part of society. They benefit from the rule of law, infrastructure and limited liability. Society expects some things in return. These expectations do change, though. A general concern about both marketing tactics and attitudes to consumer data led to regulation such as CAN-SPAM and GDPR. Environmental concerns led to CSR. Today the focus is on AI regulation.

Risk

There is a flip side to operational efficiency, and that is risk. Taking every ounce of slack out of a business process makes sense right up until the point that process gets disrupted. Large inventories start to look a lot better value when the Strait of Hormuz is blockaded.

Historically, business risk meant physical business risk. But now we need to consider IT risk too. The US is the dominant supplier of IT, as well as accounting for roughly 50% of the worldwide market. Pretty much every non-US company has critical business systems hosted on US servers or, even where those servers are not located in the US, run by US companies.

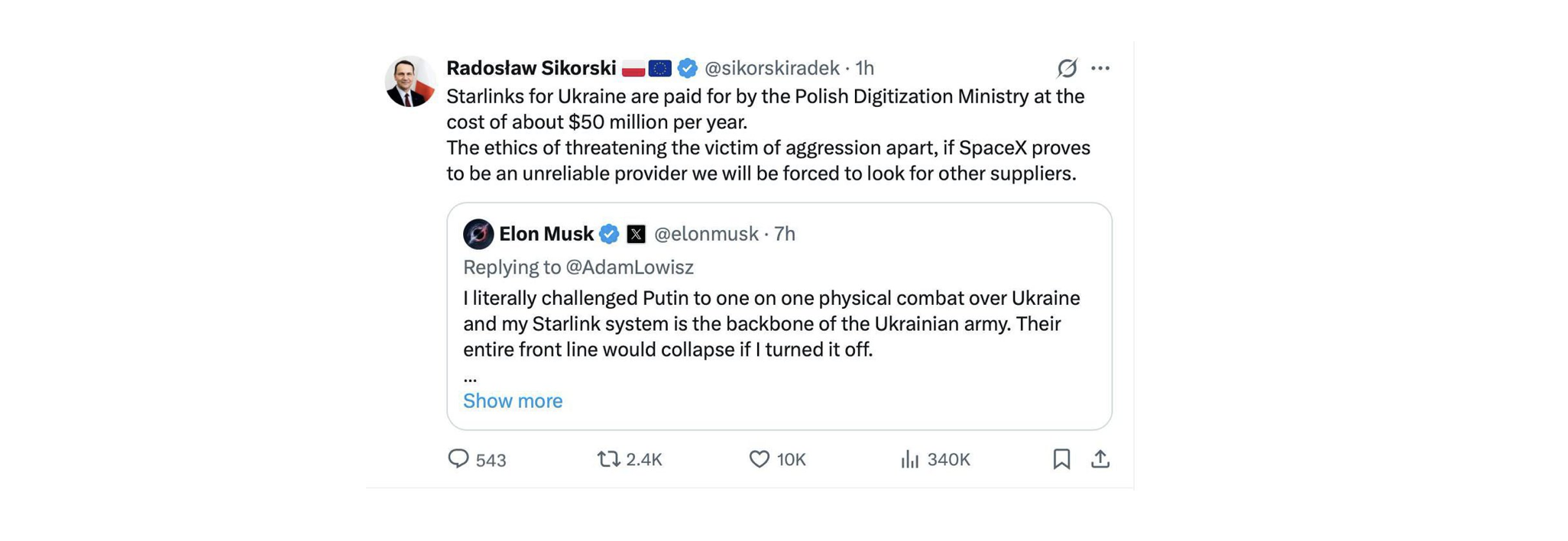

Elon Musk underlined this in March 2025 during an X/Twitter spat with Poland. Whilst the focus was Starlink, the threat clearly applies to any technology dependent on continued US support. Secretary of State Marco Rubio, in attempting to defuse things, actually made it worse by saying the US would never cut off support to US software. This landed about as well as a bank CEO “reassuring” customers that the bank is fully liquid and there is no reason to withdraw their cash.

At the same time, the US administration was imposing punitive tariffs on its trading partners and talking of annexing Greenland and Canada. Against that backdrop came the realisation of both how easy an IT cut-off would be to execute and how damaging any interruption to IT systems would be. If your US SaaS/cloud provider declared you persona non grata, your options would be limited, ugly and time-boxed.

The genie is out of the bottle and now “data sovereignty” is a search term. To paraphrase the crypto bros, “not your device, not your data”.

Quite apart from any geopolitical concerns, there is the realisation that giving companies like OpenAI, Meta and Google access to all of your company’s internal meetings, documents and emails, with an AI that can read them in bulk, could possibly backfire.

In and of itself, repatriating data centres and systems to local control doesn’t mean an architecture shift, but it does create a review point, an opportunity to do things better. And a focus on resilience absolutely pushes the idea of on-device computing. The scene is set.

Own the Power of Now

Instantaneous Response

A key part of the UX is response time. Extra clicks aren’t much of an overhead if they are instantaneous. Conversely, the most brilliant UI in the world is just a static piece of graphic design if you have to wait 5 seconds for a page load. Too many SaaS apps are too slow.

Imagine if every time you pressed Return, instead of waiting 5 seconds, you got an instant response. How many times do you do this a day? A hundred? Two hundred? Just ten minutes a day adds up to almost an hour over a five-day working week. That has value.
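The arithmetic is easy to check. A quick sketch, assuming a 5-second wait and a five-day working week (both assumptions, not measurements):

```python
def minutes_saved_per_week(waits_per_day: int, wait_seconds: float = 5, working_days: int = 5) -> float:
    """Minutes recovered per week if every wait of `wait_seconds` became instantaneous."""
    return waits_per_day * wait_seconds * working_days / 60

for waits in (100, 120, 200):
    per_day = waits * 5 / 60
    print(f"{waits} waits/day: {per_day:.1f} min/day, {minutes_saved_per_week(waits):.0f} min/week")
```

A hundred 5-second waits is about 8 minutes a day, or roughly 42 minutes a week; 120 waits is exactly ten minutes a day, or 50 minutes a week; two hundred waits is about 17 minutes a day, or roughly 83 minutes a week.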

Secure Privacy

There are many situations where absolute privacy is essential. One example is legal discovery. Clearly LLMs have much to offer, but sending a cache of emails up to ChatGPT would be madness, and locally running LLMs are now a practical alternative. On-device inference is becoming easier with tools like Ollama, and at its recent WWDC Apple exposed developer APIs for on-device inference.
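As an illustration of how little is involved, here is a sketch that sends a document to a locally running Ollama server using only the Python standard library. It assumes Ollama is installed, serving on its default port 11434, and that a model has already been pulled; the model name `llama3.2` and the prompt wording are assumptions. The request goes to `localhost`, so the text never leaves the machine (for an air-gapped setup the model must be downloaded beforehand).

```python
import json
import urllib.request

# Ollama's default local endpoint; the request never leaves this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server.

    The default model name is an assumption: use whichever model you have pulled locally.
    """
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )

def summarise_locally(document_text: str) -> str:
    """Send the text to the local model and return its reply (requires Ollama running)."""
    req = build_request("Summarise the key issues in this email:\n\n" + document_text)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

Calling `summarise_locally(email_text)` in a loop over a discovery set would process every document without any external network traffic.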

An on-device system can be entirely local. This is key: it means no data of any kind ever leaves the laptop. It can be completely air-gapped (that is, physically disconnected from the internet). As such, it is suitable for managing large caches of discovery files in both commercial and criminal disputes where sensitivity is paramount. The system is secure by design.

Genuine Sustainability

Energy consumption has now become the main constraint on building data centres. People are already protesting about the environmental impact of data centres, including their water consumption. At the same time, your laptop is using its supercomputer power to do nothing more than show a Netflix movie or display the UI of a SaaS app. This is unsustainable. There is a better way.

Limit Risk

Overseas cloud providers can no longer be counted upon to always be there. The risk is no longer primarily technical but commercial and political. We saw the impact of the CrowdStrike failure, and that was just one vendor’s accident. An intentional act to limit someone’s access to their SaaS-based systems could cripple a business within hours. If the EU/UK want to impose a digital services tax on Amazon et al., why wouldn’t the US impose a punitive tax on EU/UK customers of US SaaS? If the EU bans X, would the US ban EU customers of Salesforce?

Cyber attacks are also relevant. Although domain-joined PCs can certainly be wiped or encrypted remotely in a cyber attack, the majority of ransomware attacks are confined to the data centre. And even when an attack affects local devices, only one disconnected device needs to survive for the others to be rebuilt from it.

Another example is emergency response. It’s not uncommon for communications networks to become saturated or even fail. On-device architectures mean remote crews have access to their data at all times, regardless of external communications or the electromagnetic environment, even including jamming in hostile situations.

Lower Costs

A significant cost for SaaS application vendors is the data centre. Powering large databases takes money. Cut that, and the savings can be passed directly on to end users. In a competitive market this will happen, and existing SaaS vendors will simply be priced out. Centralised LLM vendors will need another way to support their billion-dollar burn rates when most of what they can do can be replicated locally at effectively no cost.